Unlock Potential: Efficient Prompt Experiment Tracking

Author : Kedar Supekar | Published On : 28 Mar 2024

Generative AI has sparked a wave of excitement among businesses eager to build chatbots, companions, and co-pilots that extract insights from their data. The journey begins with prompt engineering, which spans approaches such as single-shot, few-shot, and chain-of-thought prompting. Businesses often start by developing internal chatbots that help employees find insights and boost their productivity. Because customer support is a significant cost center, it has become a focus for optimization, with Retrieval-Augmented Generation (RAG) systems developed to deliver better insights.

However, if a customer support RAG system provides inaccurate or misleading information, it can bias the judgment of representatives who place too much trust in computer-generated responses. Recent incidents involving entities like Air Canada and a Chevy chatbot have highlighted the reputational and financial risks of deploying unguided chatbots for self-service support. Imagine a financial advisor chatbot that offers human-like responses but bases them on flawed or invented information that contradicts sound human judgment.

Challenge:

During experimentation, prompt authors often create numerous versions of a prompt for a single task, which can quickly become overwhelming. A significant challenge is keeping track of the prompt versions under test and being able to manage them and incorporate them into a Gen AI workflow.

Prompt engineering for complex use cases, such as legal, financial advisor, and HR advisor applications, requires extensive experimentation to ensure accuracy, quality, and safety guardrails. Although many prompt playgrounds exist, comparing the prompt history of a large set of experiments is still done offline in spreadsheets, entirely decoupled from Gen AI workflows, so prompt lineage is lost.

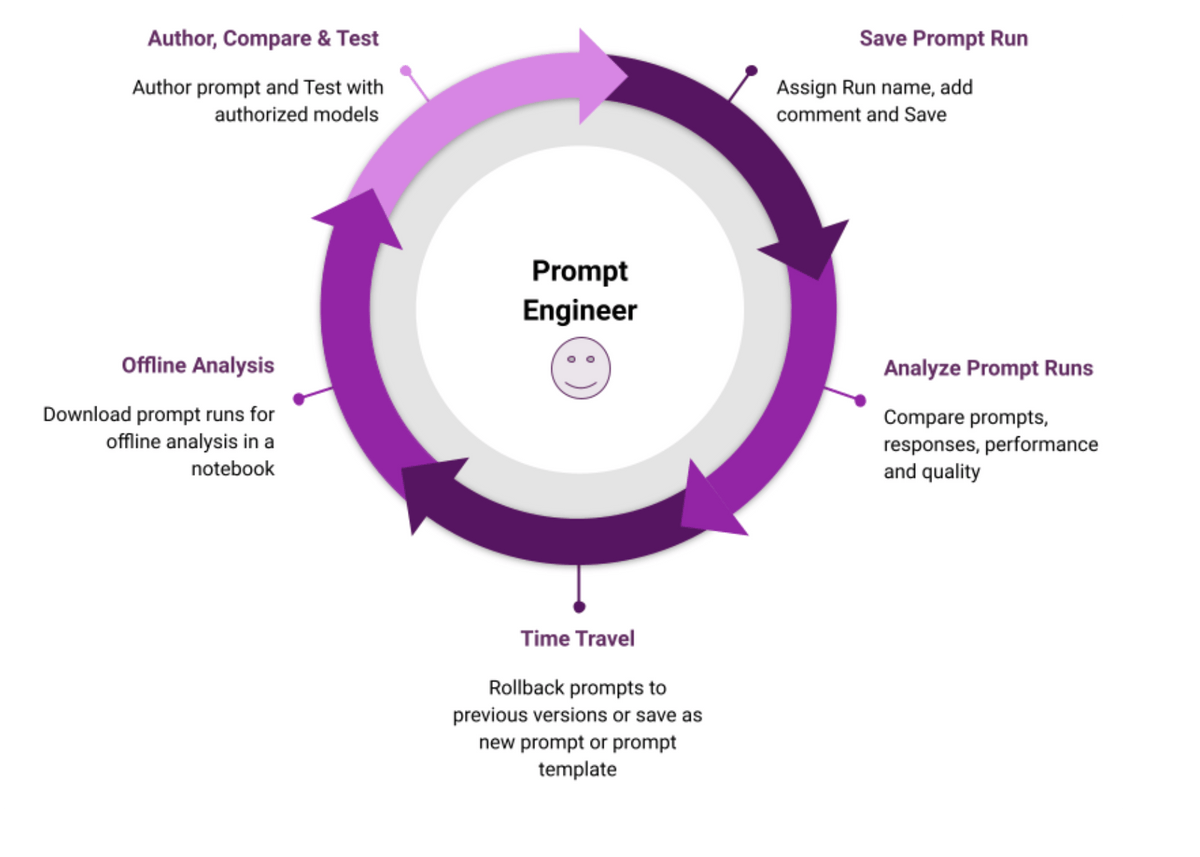

Prompt Engineering with Karini’s Prompt Playground:

Karini AI’s prompt playground revolutionizes how prompts are created, tested, and perfected across their lifecycle. This user-friendly and dynamic platform transforms domain experts into skilled prompt masters, offering a guided experience with ready-to-use templates for kick-starting prompt creation. Users can quickly evaluate their prompts using different models and model parameters, focusing on response quality, number of tokens, and response time, to select the best option. With the new feature to save prompt runs, tracking prompt experiments has never been easier.
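To make the comparison criteria concrete, here is a minimal sketch of running one prompt against several models and recording token count and response time. It is not Karini's API: `compare_models` and the stub models are hypothetical names for illustration, and the whitespace word count is only a rough stand-in for a real tokenizer.

```python
import time
from typing import Callable, Dict, List


def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> List[dict]:
    """Run one prompt against each model and record simple metrics."""
    results = []
    for name, generate in models.items():
        start = time.perf_counter()
        response = generate(prompt)
        elapsed = time.perf_counter() - start
        results.append({
            "model": name,
            "response": response,
            # Assumption: word count approximates tokens; a real tokenizer would differ.
            "tokens": len(response.split()),
            "seconds": elapsed,
        })
    return results


# Stub "models" so the sketch runs without any external service.
stub_models = {
    "model-a": lambda p: "Short answer.",
    "model-b": lambda p: "A somewhat longer answer with more words.",
}

results = compare_models("Summarize our refund policy.", stub_models)
for row in sorted(results, key=lambda r: r["tokens"]):
    print(row["model"], row["tokens"], f"{row['seconds']:.6f}s")
```

Response quality still needs a human or a separate evaluation step; the sketch only captures the two metrics that can be measured mechanically.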

Using Karini’s Prompt Playground, authors can:

- Author, Compare, and Test Prompts:
  - Experiment with prompts by adjusting the text, models, or model parameters.
  - Quickly compare the prompts against multiple authorized models for quality of responses, number of tokens, and response time to select the best prompt.

- Save Prompt Run:
  - Capture and save the trial, including the prompt, selected models, settings, generated responses, and token count and response time metrics.
  - If a “best” response is chosen during testing, it’s marked for easy identification.

- Analyze Prompt Run:
  - Review saved prompt runs to enhance and refine your work.
  - Evaluate and compare prompts for response quality and performance.

- Time Travel:
  - Revert to a previous prompt version by rolling back to a historical prompt run.
  - Save a historical prompt run as a new prompt or prompt template for future experiments or to integrate into a recipe workflow.

- Offline Analysis:
  - Download all prompt runs as a report for comprehensive offline analysis or to meet auditing requirements.
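The capabilities listed above can be pictured as a small run-tracking data structure. The sketch below is an illustration only and does not reflect how Karini implements prompt runs: `PromptRun`, `RunHistory`, and all field names are hypothetical, chosen to mirror the items the list says a saved run captures (prompt, model, settings, response, token count, response time, and a "best" marker).

```python
import csv
import io
import json
from dataclasses import dataclass, asdict
from typing import Dict, List


@dataclass
class PromptRun:
    run_id: int
    prompt: str
    model: str
    settings: Dict[str, float]
    response: str
    tokens: int
    seconds: float
    best: bool = False


class RunHistory:
    """Hypothetical in-memory store for saved prompt runs."""

    def __init__(self) -> None:
        self.runs: List[PromptRun] = []

    def save(self, **fields) -> PromptRun:
        run = PromptRun(run_id=len(self.runs) + 1, **fields)
        self.runs.append(run)
        return run

    def mark_best(self, run_id: int) -> None:
        # Exactly one run carries the "best" flag at a time.
        for run in self.runs:
            run.best = run.run_id == run_id

    def rollback(self, run_id: int) -> str:
        """Return the prompt text of a historical run so it can be reused."""
        for run in self.runs:
            if run.run_id == run_id:
                return run.prompt
        raise KeyError(run_id)

    def to_csv(self) -> str:
        """Export every run as CSV text for offline analysis or audits."""
        buffer = io.StringIO()
        writer = csv.DictWriter(buffer, fieldnames=list(PromptRun.__dataclass_fields__))
        writer.writeheader()
        for run in self.runs:
            row = asdict(run)
            row["settings"] = json.dumps(row["settings"])  # keep settings in one cell
            writer.writerow(row)
        return buffer.getvalue()


history = RunHistory()
history.save(prompt="Summarize the policy.", model="model-a",
             settings={"temperature": 0.2}, response="...", tokens=12, seconds=0.8)
history.save(prompt="Summarize the policy in two sentences.", model="model-a",
             settings={"temperature": 0.2}, response="...", tokens=9, seconds=0.7)
history.mark_best(2)
restored = history.rollback(1)   # "time travel" back to the first prompt
report = history.to_csv()        # downloadable report
```

Because every run keeps the prompt alongside its model, settings, and metrics, lineage survives even when an older version is restored or the history is exported to a spreadsheet.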

Conclusion:

A major reason many generative AI applications fail to reach production is hallucination and the compromised quality that comes with it. Prompt engineering is about communicating effectively with a generative AI model, and crafting effective prompts is an ongoing process rather than a one-time task. Each variation created during the design stage matters and needs to be managed throughout the prompt lifecycle.

With Karini's prompt playground and the prompt runs feature, authors can neatly organize and efficiently manage their experiments throughout the prompt lifecycle for the most complex use cases.

About Karini AI:

Fueled by innovation, we're making the dream of robust Generative AI systems a reality. No longer confined to specialists, Karini.ai empowers non-experts to participate actively in building, testing, and deploying Generative AI applications. As the world's first GenAIOps platform, we've democratized GenAI, empowering people to bring their ideas to life, all in one evolutionary platform.